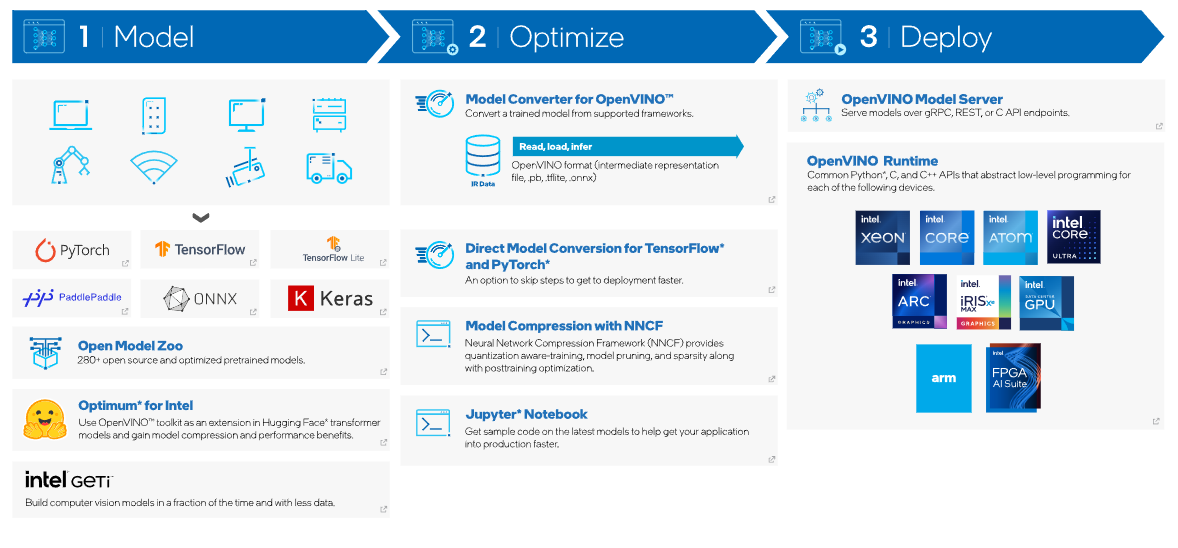

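The OpenVINO™ toolkit is an open source toolkit that accelerates AI inference with lower latency and higher throughput while maintaining accuracy, reducing model footprint, and optimizing hardware use. It streamlines AI development and the integration of deep learning in domains such as computer vision, large language models (LLMs), and generative AI.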

Convert and optimize models trained with popular frameworks such as TensorFlow and PyTorch. Deploy across a mix of Intel® hardware and environments: on-premises and on-device, in the browser, or in the cloud.

Benchmark Tool

Dataset Management Framework

Model Optimizer

Neural Network Compression Framework

Open Model Zoo

OpenVINO Model Server

Training Extensions

| Product | Details |
|---|---|
| TensorFlow | Improved out-of-the-box experience for TensorFlow sentence encoding models through the installation of OpenVINO tokenizers. |
| Hugging Face | We're making it easier for developers by integrating more OpenVINO features with the Hugging Face ecosystem. Store quantization configurations for popular models directly in Hugging Face to compress models into an int4 format while preserving accuracy and performance. |

| Feature | Details |
|---|---|
| Model Coverage | New and noteworthy models validated: Mistral, StableLM-tuned-alpha-3b, and StableLM-Epoch-3B. OpenVINO now supports Mixture of Experts (MoE), a new architecture that helps process more efficient generative models through the pipeline. |
| Performance Improvements for LLMs | Experience enhanced LLM performance on Intel® CPUs, with internal memory state enhancement and int8 precision for the key-value (KV) cache. These improvements are specifically tailored for multiquery LLMs like ChatGLM. |
| Neural Network Compression Framework (NNCF) | Improved quality of int4 weight compression for LLMs by adding the popular technique Activation-aware Weight Quantization to NNCF. This addition reduces memory requirements and helps speed up token generation. |
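As a rough illustration of what int4 weight compression does to a weight tensor, the sketch below applies symmetric group-wise 4-bit quantization to a short list of weights using only the Python standard library. It is not NNCF's implementation, and it leaves out the activation-aware scaling step of Activation-aware Weight Quantization. The function names `quantize_int4` and `dequantize_int4`, the group size, and the sample weights are hypothetical choices made for this example.

```python
from typing import List, Tuple


def quantize_int4(weights: List[float], group_size: int = 4) -> Tuple[List[int], List[float]]:
    """Symmetric group-wise quantization to signed 4-bit codes in [-7, 7].

    Hypothetical helper for illustration only; not part of NNCF or OpenVINO.
    """
    codes: List[int] = []
    scales: List[float] = []
    for start in range(0, len(weights), group_size):
        group = weights[start:start + group_size]
        max_abs = max(abs(w) for w in group)
        # One floating-point scale per group; use 1.0 for an all-zero group.
        scale = max_abs / 7 if max_abs > 0 else 1.0
        scales.append(scale)
        # Round to the nearest code and clamp to the 4-bit symmetric range.
        codes.extend(max(-7, min(7, round(w / scale))) for w in group)
    return codes, scales


def dequantize_int4(codes: List[int], scales: List[float], group_size: int = 4) -> List[float]:
    """Reconstruct approximate weights from 4-bit codes and per-group scales."""
    return [c * scales[i // group_size] for i, c in enumerate(codes)]


# Sample weights (made up for the example): two groups of four values.
weights = [0.12, -0.50, 0.33, 0.07, 1.40, -0.90, 0.05, 0.70]
codes, scales = quantize_int4(weights)
restored = dequantize_int4(codes, scales)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print("codes:", codes)
print("scales:", scales)
print("max reconstruction error:", max_error)
```

Each weight is stored as a 4-bit code plus one shared scale per group, which is where the memory saving comes from. The cost is a rounding error of at most half the group's scale per weight.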

| Product | Details |
|---|---|
| Arm Hardware Support Updates | Improved performance on Arm platforms by enabling the Arm threading library. In addition, we now support multi-core Arm platforms and have enabled FP16 precision by default on macOS. |
| Intel Hardware Support | A preview plug-in for the integrated NPU in Intel® Core™ Ultra processors (formerly code-named Meteor Lake) is now included in the main OpenVINO toolkit package on the Python Package Index (PyPI). |
| OpenVINO Toolkit Model Server | New and improved LLM serving samples from OpenVINO model server for multibatch inputs and retrieval-augmented generation (RAG). |
| JavaScript API | JavaScript developers can now access the OpenVINO API through new JavaScript bindings. |
